Profile


Dr. Andrea Bönsch

Dr. Andrea Bönsch is deputy head of the Virtual Reality and Immersive Visualization group (VRVis) and co-head of the division "Computational Science & Engineering" at the IT Center. In addition to her management responsibilities, she is a senior researcher specializing in Social VR and leads a team focused on interactions with virtual agents and avatars.

She received her Master's degree from RWTH Aachen University in 2015. During her studies, she worked part-time in the Virtual Reality and Immersive Visualization group on navigation techniques for immersive virtual environments. She successfully defended her dissertation in June 2024. Her doctoral research addressed Social VR by integrating virtual agents as advanced, emotional human interfaces into VR applications. It focused on the joint locomotion of social groups, i.e., groups of virtual agents and users who interact directly and plausibly with other group members while standing or moving in the virtual scene. Her dissertation, entitled "Social Wayfinding Strategies to Explore Immersive Virtual Environments", is available via RWTH Publications. She has a special interest in training simulations and support systems in which virtual agents act as peers, coaches, or communication partners.

orcid.org/0000-0001-5077-3675



working with Research Assistant Professor Ari Shapiro



(master thesis by Julian Koska, 2024, in preparation)

(master thesis by Konstantin Kühlem, 2024, in progress)

(bachelor thesis by Julius Hündlings, 2024)

(master thesis by Xiang Ye, 2023)

(bachelor thesis by Lukas Zimmermann, 2022, poster)

(bachelor thesis by Robin Schäfer, 2021)

(master thesis by Julian Staab, 2022)

(bachelor thesis by Till Sittart, 2022, poster)

(bachelor thesis by Nikjas Hartmann, 2022)

(bachelor thesis by Kalina Daskalova, 2022)

(master thesis by Daniel Rupp, 2021)

(master thesis by Xinyu Xia, 2021)

(bachelor thesis by Steffen Krüger, 2021)

(master thesis by Radu-Andrei Coanda, 2021, poster)

(bachelor thesis by Daniel Vonk, 2021)

(bachelor thesis by Danny Post, 2021)

(bachelor thesis by Katharina S. Güths, 2021, poster)

(bachelor thesis by David Hashem, 2021, paper)

(bachelor thesis by Johanna Tolzmann, 2020)

(master thesis by Filip Kajzer, 2020)

(master thesis by Marvin Kuhl, 2020)

(bachelor thesis by Sebastian Jan Barton, 2019/2020, extended abstract)

(bachelor thesis by Alexander R. Bluhm, 2019/2020, paper)

(master thesis by Marcel Jonda, 2018, workshop paper)

(bachelor thesis by Jan Hoffmann, 2016, workshop paper)

(master thesis by Robert Trisnadi, 2016, poster)

(internship by Robert Trisnadi, 2016)

(bachelor thesis by Jan Schnathmeier, 2016, GI presentation)

(bachelor thesis by Timothy A.W. Blut, 2016)

(internship by Yannick Donners, 2016)

(bachelor thesis by David Gilbert, 2016)

(bachelor thesis by Jonathan Wendt, 2014, co-supervised)

(master thesis by Dennis Scully, 2014, co-supervised, publication)

(bachelor thesis by Joachim Herber, 2013, co-supervised)


Publications

Beyond Words: The Impact of Static and Animated Faces as Visual Cues on Memory Performance and Listening Effort during Two-Talker Conversations

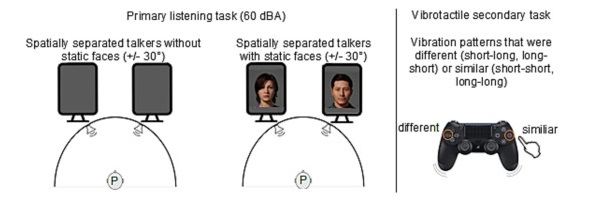

Listening to a conversation between two talkers and recalling the information is a common goal in verbal communication. However, cognitive-psychological experiments on short-term memory performance often rely on rather simple stimulus material, such as unrelated word lists or isolated sentences. The present study instead used running speech, in the form of a two-talker conversation, to investigate whether talker-related visual cues enhance short-term memory performance and reduce listening effort in quiet listening settings. In two equivalent dual-task experiments, participants listened to interrelated sentences spoken by two alternating talkers from two spatial positions, with talker-related visual cues presented as either static faces (Experiment 1, n = 30) or animated faces with lip sync (Experiment 2, n = 28). After each conversation, participants answered content-related questions as a measure of short-term memory (via the Heard Text Recall task). In parallel to listening, they performed a vibrotactile pattern recognition task to assess listening effort. Visual cue conditions (static or animated faces) were compared within-subject to a baseline condition without faces. To account for inter-individual variability, we measured individual working memory capacity, processing speed, and attentional functions and included them as cognitive covariates. After controlling for these covariates, results indicated that neither static nor animated faces improved short-term memory performance for conversational content. However, static faces reduced listening effort, whereas animated faces increased it, as indicated by secondary-task response times. Participants' subjective ratings mirrored these behavioral results. Furthermore, working memory capacity was associated with short-term memory performance, and processing speed was associated with listening effort, the latter reflected in performance on the vibrotactile secondary task.
In conclusion, this study demonstrates that visual cues influence listening effort and that individual differences in working memory and processing speed help explain variability in task performance, even in optimal listening conditions.

@article{MOHANATHASAN2026106295,

title = {Beyond words: The impact of static and animated faces as visual cues on memory performance and listening effort during two-talker conversations},

journal = {Acta Psychologica},

volume = {263},

pages = {106295},

year = {2026},

issn = {0001-6918},

doi = {10.1016/j.actpsy.2026.106295},

url = {https://www.sciencedirect.com/science/article/pii/S0001691826000946},

author = {Chinthusa Mohanathasan and Plamenna B. Koleva and Jonathan Ehret and Andrea Bönsch and Janina Fels and Torsten W. Kuhlen and Sabine J. Schlittmeier}

}

Objectifying Social Presence: Evaluating Multimodal Degraders in ECAs Using the Heard Text Recall Paradigm

Embodied conversational agents (ECAs) are key social interaction partners in various virtual reality (VR) applications, and their perceived social presence significantly influences the quality and effectiveness of user-ECA interactions. This paper investigates the potential of the Heard Text Recall (HTR) paradigm as an indirect objective proxy for evaluating social presence, which is traditionally assessed through subjective questionnaires. To this end, we use the HTR task, which was primarily designed to assess memory performance in listening tasks, in a dual-task paradigm to assess cognitive spare capacity and correlate the latter with subjectively rated social presence. As a prerequisite for this investigation, we introduce various co-verbal gesture modification techniques and assess their impact on the perceived naturalness of the presenting ECA, a crucial aspect fostering social presence. The main study then explores the applicability of HTR as a proxy for social presence by examining its effectiveness under different multimodal degraders of ECA behavior, including degraded co-verbal gestures, omitted lip synchronization, and the use of synthetic voices. The findings suggest that while HTR shows potential as an objective measure of social presence, its effectiveness is primarily evident in response to substantial changes in ECA behavior. Additionally, the study highlights the negative effects of synthetic voices and inadequate lip synchronization on perceived social presence, emphasizing the need for careful consideration of these elements in ECA design.

@ARTICLE{11271093,

author={Ehret, Jonathan and Schüppen, Jonas and Mohanathasan, Chinthusa and Ermert, Cosima A. and Fels, Janina and Schlittmeier, Sabine J. and Kuhlen, Torsten W. and Bönsch, Andrea},

journal={IEEE Transactions on Visualization and Computer Graphics},

title={Objectifying Social Presence: Evaluating Multimodal Degraders in ECAs Using the Heard Text Recall Paradigm},

year={2025},

volume={},

number={},

pages={1-15},

doi={10.1109/TVCG.2025.3636079}

}

Heard-Text Recall and Listening Effort under Irrelevant Speech and Pseudo-Speech in Virtual Reality

Introduction: Verbal communication depends on a listener’s ability to accurately comprehend and recall information conveyed in a conversation. The heard-text recall (HTR) paradigm can be used in a dual-task design to assess both memory performance and listening effort. In contrast to traditional tasks such as serial recall, this paradigm uses running speech to simulate a conversation between two talkers. This allows the talkers to be visualized in virtual reality (VR), providing co-verbal visual cues such as lip movements, turn-taking cues, and gaze behavior. While this paradigm has been investigated under pink noise, the impact of more realistic irrelevant stimuli such as speech, which, unlike noise, provides temporal fluctuations and meaning, remains unexplored.

Methods: In this study (N = 24), the HTR task was administered in an immersive VR environment under three noise conditions: silence, pseudo-speech, and speech. A vibrotactile secondary task was administered to quantify listening effort.

Results: The results indicate an effect of irrelevant speech on memory and speech comprehension as well as secondary task performance, with a stronger impact of speech relative to pseudo-speech.

Discussion: The study validates the sensitivity of the HTR in a dual-task design to background speech stimuli and highlights the relevance of linguistic interference-by-process for listening effort, speech comprehension, and memory.

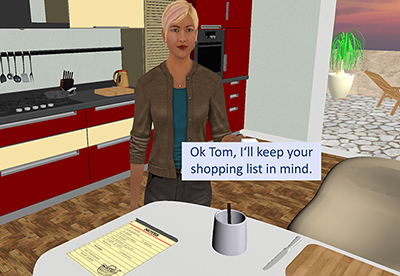

Fostering Engagement through a Latency-Optimized LLM-based Dialogue System for Multimodal ECA Responses

Interactions with Embodied Conversational Agents (ECAs) are an integral part of many social Virtual Reality (VR) applications, increasing the need for free, context-sensitive conversations characterized by latency-optimized and multimodal ECA responses. Our methodology consists of three interdependent steps. First, we present a holistic framework driven by a Large Language Model (LLM), which integrates existing technologies into a modular and extendable system that is developer-friendly and suitable for diverse use cases. Building on this foundation, our second step comprises streaming-based optimizations that effectively reduce measured response latency, thereby facilitating real-time conversations. Finally, we conduct a comparative analysis between our latency-optimized LLM-driven ECA and a conventional button-based Wizard-of-Oz (WoZ) system to evaluate differences in user engagement. Our insights reveal that users perceive our LLM-driven ECA as significantly more natural, competent, and trustworthy than its WoZ counterpart, despite objective measures indicating slightly higher latency in technical performance. These findings underscore the potential of LLMs to enhance engagement with ECAs in VR environments.

Audiovisual angle and voice incongruence do not affect audiovisual verbal short-term memory in virtual reality

Virtual reality (VR) environments are frequently used in auditory and cognitive research to imitate real-life scenarios. The visual component in VR has the potential to affect how auditory information is processed, especially if incongruences between the visual and auditory information occur. This study investigated how audiovisual incongruence in VR implemented with a head-mounted display (HMD) affects verbal short-term memory compared to presentation of the same material on traditional computer monitors. Two experiments were conducted with both display devices and two types of audiovisual incongruence: angle (Exp 1) and voice (Exp 2) incongruence. To quantify short-term memory, an audiovisual verbal serial recall (avVSR) task was developed in which an embodied conversational agent (ECA) was animated to speak a digit sequence, which participants had to remember. The results showed no effect of the display devices on the proportion of correctly recalled digits overall, although subjective evaluations showed a higher sense of presence in the HMD condition. For the extreme conditions of angle incongruence in the computer monitor presentation, the proportion of correctly recalled digits increased marginally, presumably due to raised attention, but the effect size was negligible. Response times were not affected by incongruences with either display device across both experiments. These findings suggest that, at least for the conditions studied here, the avVSR task is robust against angle and voice audiovisual incongruences in both HMD and computer monitor displays.

@article{Ermert2025,

doi = {10.1371/journal.pone.0330693},

author = {Ermert, Cosima A. AND Yadav, Manuj AND Ehret, Jonathan AND Mohanathasan, Chinthusa AND Bönsch, Andrea AND Kuhlen, Torsten W. AND Schlittmeier, Sabine J. AND Fels, Janina},

journal = {PLOS ONE},

publisher = {Public Library of Science},

title = {Audiovisual angle and voice incongruence do not affect audiovisual verbal short-term memory in virtual reality},

year = {2025},

month = {08},

volume = {20},

url = {https://doi.org/10.1371/journal.pone.0330693},

pages = {1-23},

number = {8},

}

Demo: A Latency-Optimized LLM-based Multimodal Dialogue System for Embodied Conversational Agents in VR

Interactions with Embodied Conversational Agents (ECAs) are essential in many social Virtual Reality (VR) applications, highlighting the growing demand for free-flowing, context-aware conversations supported by low-latency, multimodal ECA responses. We introduce a modular, extensible framework powered by a Large Language Model (LLM), featuring streaming-based optimization techniques specifically designed for multimodal responses. Our system can control the agent's own behavior and task execution, such as moving through the Immersive Virtual Environment (IVE) under direct control of the LLM, and can also react to events in the IVE. In our study, the applied optimizations achieved an average latency improvement of about 66% compared to the unoptimized system.


@inproceedings{Kuehlem2025,

author = {K\"{u}hlem, Konstantin W. and Ehret, Jonathan and Kuhlen, Torsten W. and B\"{o}nsch, Andrea},

title = {A Latency-Optimized LLM-based Multimodal Dialogue System for Embodied Conversational Agents in VR},

year = {2025},

isbn = {9798400715082},

publisher = {Association for Computing Machinery},

doi = {10.1145/3717511.3749287},

abstract = {Interactions with Embodied Conversational Agents (ECAs) are essential in many social Virtual Reality (VR) applications, highlighting the growing demand for free-flowing, context-aware conversations supported by low-latency, multimodal ECA responses. We introduce a modular, extensible framework powered by a Large Language Model (LLM), featuring streaming-based optimization techniques specially crafted for multimodal responses. Our system is capable of controlling self-behavior and task execution, in the form of moving through the Immersive Virtual Environment (IVE) directly controlled by the LLM, and is also capable of reacting to events in the IVE. In our study, our applied optimizations achieved a latency improvement of about 66\% on average compared to having no optimizations.},

booktitle = {Proceedings of the 25th ACM International Conference on Intelligent Virtual Agents},

articleno = {49},

numpages = {3},

series = {IVA '25}

}

Poster: Listening Effort In Populated Audiovisual Scenes Under Plausible Room Acoustic Conditions

Listening effort in real-world environments is shaped by a complex interplay of factors, including time-varying background noise, visual and acoustic cues from both interlocutors and distractors, and the acoustic properties of the surrounding space. However, many studies investigating listening effort neglect both auditory and visual fidelity: static background noise is frequently used to avoid variability, talker visualization often disregards acoustic complexity, and experiments are commonly conducted in free-field environments without spatialized sound or realistic room acoustics. These limitations risk undermining the ecological validity of study outcomes. To address this, we developed an audiovisual virtual reality (VR) framework capable of rendering immersive, realistic scenes that integrate dynamic auditory and visual cues. Background noise included time-varying speech and non-speech sounds (e.g., conversations, appliances, traffic), spatialized in controlled acoustic environments. Participants were immersed in a visually rich VR setting populated with animated virtual agents. Listening effort was assessed using a heard-text-recall paradigm embedded in a dual-task design: participants listened to and remembered short stories told by two embodied conversational agents while simultaneously performing a vibrotactile secondary task. We compared three room acoustic conditions: a free-field environment, a room optimized for reverberation time, and an untreated reverberant room. Preliminary results from 30 participants (15 female; age range: 18–33; M = 25.1, SD = 3.05) indicated that room acoustics significantly affected both listening effort and short-term memory performance, with notable differences between free-field and reverberant conditions. These findings underscore the importance of realistic acoustic environments when investigating listening behavior in immersive audiovisual settings.

Exploring Gaze Dynamics: Initial Findings on the Role of Listening Bystanders in Conversational Interactions

This work-in-progress paper investigates how virtual listening bystanders influence participants’ gaze behavior and their perception of turn-taking during scripted conversations with embodied conversational agents (ECAs). Twenty-five participants interacted with five ECAs (two speakers and three bystanders) across three conditions: no bystanders, bystanders exhibiting random gazing behavior, and social bystanders engaging in mutual gaze and backchanneling. Participants either observed the conversation or actively participated as speakers by reciting prompted sentences. The results indicated that bystanders reduced participants’ attention to the speakers, hindering their ability to anticipate turn changes and resulting in longer delays before they shifted their gaze to the new speaker after an ECA yielded the turn. Random-gazing bystanders were particularly noted for obscuring the conversational flow. These findings underscore the challenges of designing effective and natural conversational environments, highlighting the need for careful consideration of ECA behaviors to enhance user engagement.

@INPROCEEDINGS{Ehret2025,

author={Ehret, Jonathan and Dasbach, Valentin and Hartmann, Jan-Nikjas and Fels, Janina and Kuhlen, Torsten W. and Bönsch, Andrea},

booktitle={2025 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

title={Exploring Gaze Dynamics: Initial Findings on the Role of Listening Bystanders in Conversational Interactions},

year={2025},

volume={},

number={},

pages={748-752},

doi={10.1109/VRW66409.2025.00151}}

Front Matter: 9th Edition of IEEE VR Workshop: Virtual Humans and Crowds in Immersive Environments (VHCIE)

The VHCIE workshop aims to explore and advance the creation of believable virtual humans and crowds within immersive virtual environments (IVEs). With the emergence of various tools, algorithms, and systems, it is now possible to design realistic virtual characters, known as virtual agents (VAs), that can populate expansive environments with thousands of individuals. These sophisticated crowd simulations facilitate dynamic interactions among the VAs themselves and between VAs and virtual reality (VR) users. The VHCIE workshop seeks to highlight the diverse range of VR applications for these advancements, including virtual tour guides, platforms for professional training, studies on human behavior, and even recreations of live events like concerts. By fostering discussions around these themes, VHCIE aims to inspire innovative approaches and collaborative efforts that push the boundaries of what is possible in social IVEs, while also providing an open forum for networking and exchanging ideas among participants.

@INPROCEEDINGS{Boensch2025,

author={Bönsch, Andrea and Chollet, Mathieu and Martin, Jordan and Olivier, Anne-Hélène and Pettré, Julien},

booktitle={2025 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

title={9th Edition of IEEE VR Workshop: Virtual Humans and Crowds in Immersive Environments (VHCIE)},

year={2025},

volume={},

number={},

pages={703-704},

doi={10.1109/VRW66409.2025.00142}

}

Wayfinding in Immersive Virtual Environments as Social Activity Supported by Virtual Agents

Effective navigation and interaction within immersive virtual environments rely on thorough scene exploration. Wayfinding is therefore essential, assisting users in comprehending their surroundings, planning routes, and making informed decisions. Real-life observations show that wayfinding is not only a cognitive process but also a social activity profoundly influenced by the presence and behaviors of others. In virtual environments, these 'others' are virtual agents (VAs), defined as anthropomorphic computer-controlled characters, who enliven the environment and can serve as background characters or direct interaction partners. However, little research has explored how to use VAs efficiently as social wayfinding support. In this paper, we assess and contrast user experience, user comfort, and the acquisition of scene knowledge through a between-subjects study involving n = 60 participants across three distinct wayfinding conditions in a sparsely populated urban environment: (i) unsupported wayfinding, (ii) strong social wayfinding using a virtual supporter who incorporates guiding and accompanying elements while directly impacting the participants' wayfinding decisions, and (iii) weak social wayfinding using flows of VAs that subtly influence the participants' wayfinding decisions through their locomotion behavior. Our work is the first to compare the impact of VAs' behavior in virtual reality on users' scene exploration, including spatial awareness, scene comprehension, and comfort. The results show the general utility of social wayfinding support, while underscoring the superiority of the strong type. Nevertheless, weak social wayfinding remains a promising technique that requires further exploration. Thus, our work contributes to the enhancement of VAs as advanced user interfaces, increasing user acceptance and usability.

@article{Boensch2024,

title={Wayfinding in Immersive Virtual Environments as Social Activity Supported by Virtual Agents},

author={B{\"o}nsch, Andrea and Ehret, Jonathan and Rupp, Daniel and Kuhlen, Torsten W.},

journal={Frontiers in Virtual Reality},

volume={4},

year={2024},

pages={1334795},

publisher={Frontiers},

doi={10.3389/frvir.2023.1334795}

}

A Lecturer’s Voice Quality and its Effect on Memory, Listening Effort, and Perception in a VR Environment

Many lecturers develop voice problems, such as hoarseness. Nevertheless, research on how voice quality influences listeners’ perception, comprehension, and retention of spoken language is limited to a small number of audio-only experiments. We aimed to address this gap by using audio-visual virtual reality (VR) to investigate the impact of a lecturer’s hoarseness on university students’ heard text recall, listening effort, and listening impression. Fifty participants were immersed in a virtual seminar room, where they engaged in a dual-task paradigm. They listened to narratives presented by a virtual female professor, who spoke in either a typical or a hoarse voice. Simultaneously, participants performed a secondary task. Results revealed significantly prolonged secondary-task response times with the hoarse voice compared to the typical voice, indicating increased listening effort. Subjectively, participants rated the hoarse voice as more annoying, more effortful to listen to, and more impeding to their cognitive performance. No effect of voice quality was found on heard text recall, suggesting that, while hoarseness may compromise certain aspects of spoken language processing, this might not necessarily result in reduced information retention. In summary, our findings underscore the importance of promoting vocal health among lecturers, which may contribute to enhanced listening conditions in learning spaces.


@article{Schiller2024,

author = {Isabel S. Schiller and Carolin Breuer and Lukas Aspöck and Jonathan Ehret and Andrea Bönsch and Torsten W. Kuhlen and Janina Fels and Sabine J. Schlittmeier},

doi = {10.1038/s41598-024-63097-6},

issn = {2045-2322},

issue = {1},

journal = {Scientific Reports},

keywords = {Audio-visual language processing, Virtual reality, Voice quality},

month = {5},

pages = {12407},

pmid = {38811832},

title = {A lecturer’s voice quality and its effect on memory, listening effort, and perception in a VR environment},

volume = {14},

url = {https://www.nature.com/articles/s41598-024-63097-6},

year = {2024},

}

Authentication in Immersive Virtual Environments through Gesture-Based Interaction with a Virtual Agent

Authentication poses a significant challenge in VR applications, as conventional methods, such as text input for usernames and passwords, prove cumbersome and unnatural in immersive virtual environments. Alternatives such as password managers or two-factor authentication may require users to disengage from the virtual experience by removing their headsets. Consequently, we present a novel system that uses virtual agents (VAs) as interaction partners, enabling users to authenticate naturally through a set of ten gestures, such as high fives, fist bumps, or waving. By combining these gestures, users can create personalized authentication sequences akin to PINs, potentially enhancing security without compromising the immersive experience. To gain initial insights into the suitability of this authentication process, we conducted a formal expert review with five participants and compared our system to a virtual keypad authentication approach. While our results show that the effectiveness of a VA-mediated gesture-based authentication system is still limited, they motivate further research in this area.

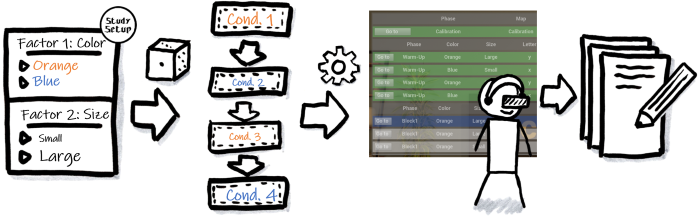

StudyFramework: Comfortably Setting up and Conducting Factorial-Design Studies Using the Unreal Engine

Setting up and conducting user studies is fundamental to virtual reality research. Yet these studies are often developed from scratch, which is time-consuming and especially hard and error-prone for novice developers. In this paper, we introduce the StudyFramework, a framework specifically designed to streamline the setup and execution of factorial-design VR-based user studies within the Unreal Engine, significantly enhancing the overall process. We describe its core concepts, such as setup, randomization, the experimenter view, and logging. After using our framework to set up and conduct their respective studies, 11 study developers provided feedback through a structured questionnaire. This feedback was generally positive, highlighting the framework's simplicity and usability, and is discussed in detail.


@InProceedings{Ehret2024a,

author={Ehret, Jonathan and Bönsch, Andrea and Fels, Janina and Schlittmeier, Sabine J. and Kuhlen, Torsten W.},

booktitle={2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW): Workshop "Open Access Tools and Libraries for Virtual Reality"},

title={StudyFramework: Comfortably Setting up and Conducting Factorial-Design Studies Using the Unreal Engine},

year={2024}

}

Audiovisual Coherence: Is Embodiment of Background Noise Sources a Necessity?

Exploring the synergy between visual and acoustic cues in virtual reality (VR) is crucial for elevating user engagement and perceived (social) presence. We present a study examining the necessity and design impact of background sound source visualizations to guide the design of future soundscapes. To this end, we immersed n = 27 participants using a head-mounted display (HMD) within a virtual seminar room with six virtual peers and a virtual female professor. Participants engaged in a dual-task paradigm involving simultaneously listening to the professor and performing a secondary vibrotactile task, followed by recalling the heard speech content. We compared three types of background sound source visualizations in a within-subject design: no visualization, static visualization, and animated visualization. Participants’ subjective ratings indicate the importance of animated background sound source visualization for an optimally coherent audiovisual representation, particularly when embedding peer-emitted sounds. However, despite this subjective preference, audiovisual coherence did not affect participants’ performance in the dual-task paradigm measuring their listening effort.


@InProceedings{Ehret2024b,

author={Ehret, Jonathan and Bönsch, Andrea and Schiller, Isabel S. and Breuer, Carolin and Aspöck, Lukas and Fels, Janina and Schlittmeier, Sabine J. and Kuhlen, Torsten W.},

booktitle={2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW): "Workshop on Virtual Humans and Crowds in Immersive Environments (VHCIE)"},

title={Audiovisual Coherence: Is Embodiment of Background Noise Sources a Necessity?},

year={2024}

}

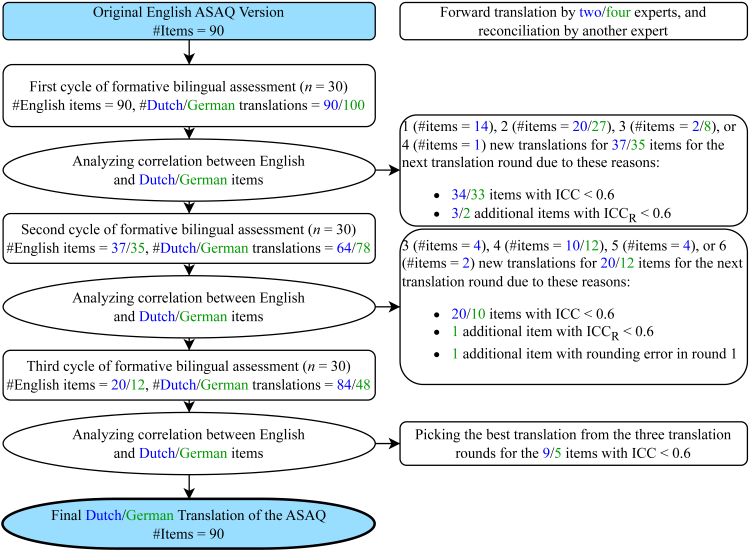

German and Dutch Translations of the Artificial-Social-Agent Questionnaire Instrument for Evaluating Human-Agent Interactions

Enabling the widespread use of the Artificial-Social-Agent (ASA) Questionnaire, a research instrument to comprehensively assess diverse ASA qualities while ensuring comparability, requires translations beyond the original English-language questionnaire. We thus present Dutch and German translations of the long and short versions of the ASA Questionnaire and describe the translation challenges we encountered. Summative assessments with 240 English-Dutch and 240 English-German bilingual participants show, on average, excellent correlations (Dutch ICC M = 0.82, SD = 0.07, range [0.58, 0.93]; German ICC M = 0.81, SD = 0.09, range [0.58, 0.94]) with the original long version on the construct and dimension level. Results for the short version show, on average, good correlations (Dutch ICC M = 0.65, SD = 0.12, range [0.39, 0.82]; German ICC M = 0.67, SD = 0.14, range [0.30, 0.91]). We hope these validated translations allow the Dutch- and German-speaking populations to evaluate ASAs in their own language.

@InProceedings{Albers2024,

author = {Nele Albers and Andrea Bönsch and Jonathan Ehret and Boleslav A. Khodakov and Willem-Paul Brinkman},

booktitle = {ACM International Conference on Intelligent Virtual Agents (IVA ’24)},

title = {German and Dutch Translations of the Artificial-Social-Agent Questionnaire Instrument for Evaluating Human-Agent Interactions},

year = {2024},

organization = {ACM},

pages = {4},

doi = {10.1145/3652988.3673928},

}

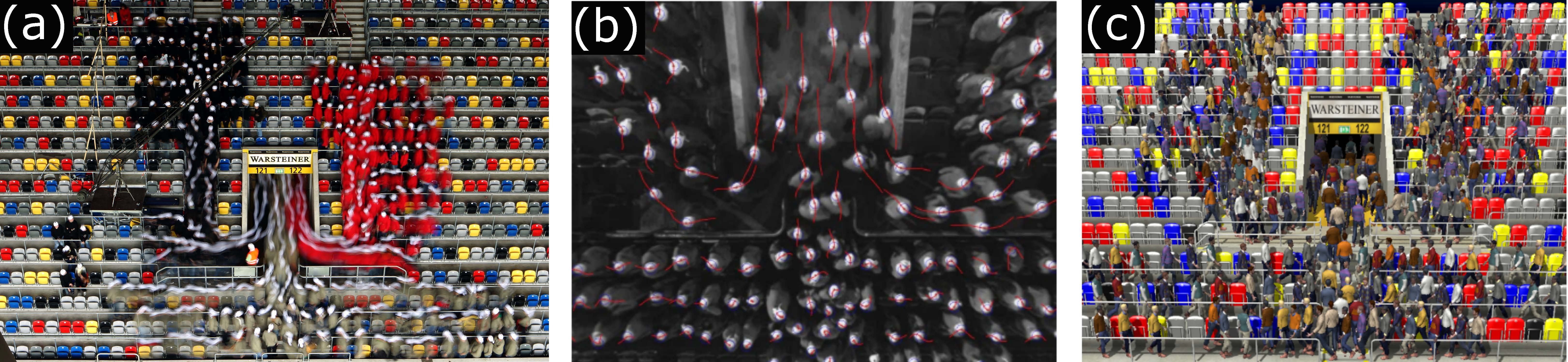

Late-Breaking Report: VR-CrowdCraft: Coupling and Advancing Research in Pedestrian Dynamics and Social Virtual Reality

VR-CrowdCraft is a newly formed interdisciplinary initiative dedicated to the convergence and advancement of two distinct yet interconnected research fields: pedestrian dynamics (PD) and social virtual reality (VR). The initiative aims to establish foundational workflows for systematically integrating PD data obtained from real-life experiments into immersive virtual environments (IVEs), with scenarios ranging from smaller clusters of approximately ten individuals to larger groups of several hundred pedestrians. It addresses two crucial goals: (1) advancing pedestrian dynamics analysis and (2) advancing virtual pedestrian behavior, i.e., authentic populated IVEs and new PD experiments. The late-breaking report (LBR) presentation will focus on goal 1.

Who's next? Integrating Non-Verbal Turn-Taking Cues for Embodied Conversational Agents

Taking turns in a conversation is a delicate interplay of various signals, which we as humans can easily decipher. Embodied conversational agents (ECAs) communicating with humans should leverage this ability for smooth and enjoyable conversations. Extensive research has analyzed human turn-taking cues, and attempts have been made to predict turn-taking based on observed cues. These cues range from prosodic, semantic, and syntactic modulation over adapted gesture and gaze behavior to actively used respiration. However, when such behavior is generated for social robots or ECAs, often only a single modality, e.g., gaze, is considered. We strive to design a comprehensive system that produces cues for all non-verbal modalities: gestures, gaze, and breathing. The system provides valuable cues without requiring any adaptation of the speech content. We evaluated our system in a VR-based user study with N = 32 participants executing two subsequent tasks. First, we asked them to listen to two ECAs taking turns in several conversations. Second, participants engaged in taking turns with one of the ECAs directly. We examined the system’s usability and the perceived social presence of the ECAs' turn-taking behavior, both with respect to each individual non-verbal modality and their interplay. While we found effects of gesture manipulation in interactions with the ECAs, we found no effects on social presence.

This work is licensed under a Creative Commons Attribution 4.0 International License.


@InProceedings{Ehret2023,

author = {Jonathan Ehret and Andrea Bönsch and Patrick Nossol and Cosima A. Ermert and Chinthusa Mohanathasan and Sabine J. Schlittmeier and Janina Fels and Torsten W. Kuhlen},

booktitle = {ACM International Conference on Intelligent Virtual Agents (IVA ’23)},

title = {Who's next? Integrating Non-Verbal Turn-Taking Cues for Embodied Conversational Agents},

year = {2023},

organization = {ACM},

pages = {8},

doi = {10.1145/3570945.3607312},

}

Effect of Head-Mounted Displays on Students’ Acquisition of Surgical Suturing Techniques Compared to an E-Learning and Tutor-Led Course: A Randomized Controlled Trial

Background: Although surgical suturing is one of the most important basic skills, many medical school graduates do not acquire sufficient knowledge of it due to its lack of integration into the curriculum or a shortage of tutors. E-learning approaches attempt to address this issue but still rely on the involvement of tutors. Furthermore, the learning experience and visual-spatial ability appear to play a critical role in surgical skill acquisition. Virtual reality head-mounted displays (HMDs) could address this, but the benefits of immersive and stereoscopic learning of surgical suturing techniques are still unclear.

Material and Methods: In this multi-arm randomized controlled trial, 150 novices participated. Three teaching modalities were compared: an e-learning course (monoscopic) and an HMD-based course (stereoscopic, immersive), both self-directed, and a tutor-led course with feedback. Suturing performance was recorded by video camera both before and after course participation (>26 hours of video material) and assessed in a blinded fashion using the OSATS Global Rating Score (GRS). Furthermore, the optical flow of the videos was determined using an algorithm. The number of sutures performed was counted, visual-spatial ability was measured with the mental rotation test (MRT), and courses were assessed with questionnaires.

Results: Students' self-assessment in the HMD-based course was comparable to that of the tutor-led course and significantly better than in the e-learning course (P=0.003). Course suitability was rated best for the tutor-led course (x̄=4.8), followed by the HMD-based (x̄=3.6) and e-learning (x̄=2.5) courses. The median GRS was comparable between courses (P=0.15) at 12.4 (95% CI 10.0-12.7) for the e-learning course, 14.1 (95% CI 13.0-15.0) for the HMD-based course, and 12.7 (95% CI 10.3-14.2) for the tutor-led course. However, the GRS was significantly correlated with the number of sutures performed during the training session (P=0.002), but not with visual-spatial ability (P=0.626). Optical flow (R²=0.15, P<0.001) and the number of sutures performed (R²=0.73, P<0.001) can be used as additional measures to the GRS.

Conclusion: The use of HMDs with stereoscopic and immersive video provides advantages in the learning experience and should be preferred over a traditional web application for e-learning. Contrary to expectations, feedback is not necessary for novices to achieve a sufficient level in suturing; only the number of surgical sutures performed during training is a good determinant of competence improvement. Nevertheless, feedback still enhances the learning experience. Therefore, automated assessment as an alternative feedback approach could further improve self-directed learning modalities. As a next step, the data from this study could be used to develop such automated AI-based assessments.

@Article{Peters2023,

author = {Philipp Peters and Martin Lemos and Andrea Bönsch and Mark Ooms and Max Ulbrich and Ashkan Rashad and Felix Krause and Myriam Lipprandt and Torsten Wolfgang Kuhlen and Rainer Röhrig and Frank Hölzle and Behrus Puladi},

journal = {International Journal of Surgery},

title = {Effect of head-mounted displays on students' acquisition of surgical suturing techniques compared to an e-learning and tutor-led course: A randomized controlled trial},

year = {2023},

month = {may},

volume = {Publish Ahead of Print},

creationdate = {2023-05-12T11:00:37},

doi = {10.1097/js9.0000000000000464},

modificationdate = {2023-05-12T11:00:37},

publisher = {Ovid Technologies (Wolters Kluwer Health)},

}

Voice Quality and its Effects on University Students' Listening Effort in a Virtual Seminar Room

A teacher’s poor voice quality may increase listening effort in pupils, but it is unclear whether this effect persists in adult listeners. Thus, the goal of this study is to examine the impact of vocal hoarseness on university students' listening effort in a virtual seminar room. An audio-visual immersive virtual reality environment is used to simulate a typical seminar room with common background sounds and fellow students represented as wooden mannequins. Participants wear a head-mounted display and are equipped with two controllers to engage in a dual-task paradigm. The primary task is to listen to a virtual professor reading short texts and retain relevant content information to be recalled later. The texts are presented either in a normal or an imitated hoarse voice. In parallel, participants perform a secondary task, which is responding to tactile vibration patterns via the controllers. It is hypothesized that listening to the hoarse voice increases listening effort, so that more cognitive resources are needed for the primary task and secondary task performance declines. Results are presented and discussed in light of students’ cognitive performance and listening challenges in higher education learning environments.

@INPROCEEDINGS{Schiller:977871,

author = {Schiller, Isabel Sarah and Aspöck, Lukas and Breuer,

Carolin and Ehret, Jonathan and Bönsch, Andrea and Fels,

Janina and Kuhlen, Torsten and Schlittmeier, Sabine Janina},

title = {{V}oice Quality and its Effects on University

Students' Listening Effort in a Virtual Seminar Room},

year = {2023},

month = {Dec},

date = {2023-12-04},

organization = {Acoustics 2023, Sydney (Australia), 4

Dec 2023 - 8 Dec 2023},

doi = {10.1121/10.0022982}

}

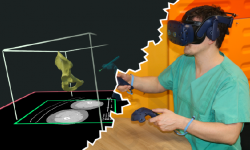

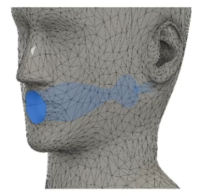

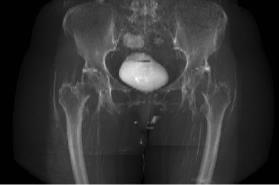

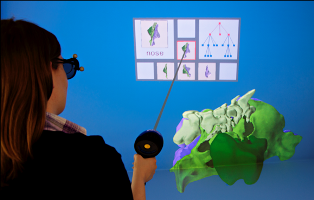

Advantages of a Training Course for Surgical Planning in Virtual Reality in Oral and Maxillofacial Surgery

Background: As an integral part of computer-assisted surgery, virtual surgical planning (VSP) leads to significantly better surgery results, such as for oral and maxillofacial reconstruction with microvascular grafts of the fibula or iliac crest. It is performed on a 2D computer desktop (DS) based on preoperative medical imaging. However, in this environment, VSP is associated with shortcomings, such as a time-consuming planning process and the requirement of a learning process. Therefore, a virtual reality (VR)-based VSP application has great potential to reduce or even overcome these shortcomings due to the benefits of visuospatial vision, bimanual interaction, and full immersion. However, the efficacy of such a VR environment has not yet been investigated.

Objective: Does VR offer advantages in learning process and working speed while providing similarly good results compared to a traditional DS working environment?

Methods: During a training course, novices were taught how to use a software application in a DS environment (3D Slicer) and in a VR environment (Elucis) for the segmentation of fibulae and os coxae (n = 156), and they were asked to carry out the maneuvers as accurately and quickly as possible. The individual learning processes in both environments were compared using objective criteria (time and segmentation performance) and self-reported questionnaires. The models resulting from the segmentation were compared mathematically (Hausdorff distance and Dice coefficient) and evaluated by two experienced radiologists in a blinded manner (score).
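The two metrics used to compare the segmented models can be illustrated with a minimal sketch. It assumes each segmentation is available as a set of integer voxel coordinates (a hypothetical representation; the study's actual data format and tooling are not described here) and uses brute-force nearest-neighbour search, which is only practical for small models.

```python
import math
from typing import Iterable, Set, Tuple

Voxel = Tuple[int, int, int]  # hypothetical voxel index (x, y, z)


def dice_coefficient(a: Set[Voxel], b: Set[Voxel]) -> float:
    """Dice = 2|A ∩ B| / (|A| + |B|); 1.0 means identical segmentations."""
    if not a and not b:
        return 1.0  # convention: two empty masks agree perfectly
    return 2.0 * len(a & b) / (len(a) + len(b))


def _directed_hausdorff(a: Iterable[Voxel], b: Set[Voxel]) -> float:
    # For every point in a, find its nearest neighbour in b; keep the worst case.
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff_distance(a: Set[Voxel], b: Set[Voxel]) -> float:
    """Symmetric Hausdorff distance in voxel units (brute force, O(|A|*|B|))."""
    if not a or not b:
        raise ValueError("Hausdorff distance is undefined for empty sets")
    return max(_directed_hausdorff(a, b), _directed_hausdorff(b, a))


if __name__ == "__main__":
    reference = {(0, 0, 0), (1, 0, 0), (2, 0, 0)}
    novice = {(0, 0, 0), (1, 0, 0), (1, 1, 0), (4, 0, 0)}
    print(dice_coefficient(reference, novice))    # 4/7, about 0.571
    print(hausdorff_distance(reference, novice))  # 2.0
```

Dice rewards overall overlap, while the Hausdorff distance reports the single worst boundary deviation, so the two complement each other. Multiplying by the voxel spacing would convert the distance to millimetres; production tools typically use distance transforms or k-d trees instead of brute force.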


Conclusions: The more rapid learning process and the ability to work faster in the VR environment could save time and reduce the VSP workload, providing certain advantages over the DS environment.

@article{Ulbrich2022,

title={Advantages of a Training Course for Surgical Planning in Virtual Reality in Oral and Maxillofacial Surgery},

author={Ulbrich, M. and Van den Bosch, V. and Bönsch, A. and Gruber, L. J. and Ooms, M. and Melchior, C. and Motmaen, I. and Wilpert, C. and Rashad, A. and Kuhlen, T. W. and Hölzle, F. and Puladi, B.},

journal={JMIR Serious Games},

volume={ 28/11/2022:40541 (forthcoming/in press) },

year={2022},

publisher={JMIR Publications Inc., Toronto, Canada}

}

Poster: Enhancing Proxy Localization in World in Miniatures Focusing on Virtual Agents

Virtual agents (VAs) are increasingly used in large-scale architectural immersive virtual environments (LAIVEs) to enhance user engagement and presence. However, challenges persist in effectively localizing these VAs for user interactions and in optimally orchestrating them for an interactive experience. To address these issues, we propose to extend worlds in miniature (WIMs) with different localization and manipulation techniques, as these 3D miniature scene replicas embedded within LAIVEs have already proven effective for wayfinding, navigation, and object manipulation. The contribution of our ongoing research is thus the enhancement of manipulation and localization capabilities within WIMs, focusing on the use case of VAs.

@InProceedings{Boensch2023c,

author = {Andrea Bönsch and Radu-Andrei Coanda and Torsten W. Kuhlen},

booktitle = {{V}irtuelle und {E}rweiterte {R}ealit\"at, 14.

{W}orkshop der {GI}-{F}achgruppe {VR}/{AR}},

title = {Enhancing Proxy Localization in World in

Miniatures Focusing on Virtual Agents},

year = {2023},

organization = {Gesellschaft für Informatik e.V.},

doi = {10.18420/vrar2023_3381}

}

Poster: Whom Do You Follow? Pedestrian Flows Constraining the User’s Navigation during Scene Exploration

In this work-in-progress, we strive to combine two wayfinding techniques supporting users in gaining scene knowledge, namely (i) the River Analogy, in which users are considered as boats automatically floating down predefined rivers, e.g., streets in an urban scene, and (ii) virtual pedestrian flows as social cues indirectly guiding users through the scene. In our combined approach, the pedestrian flows function as rivers. To navigate through the scene, users leash themselves to a pedestrian of choice, considered as the boat, and are dragged along the flow towards an area of interest. Upon arrival, users can detach themselves to freely explore the site without navigational constraints. We briefly outline our approach and discuss the results of an initial study focusing on various leashing visualizations.

@InProceedings{Boensch2023b,

author = {Andrea Bönsch and Lukas B. Zimmermann and Jonathan Ehret and Torsten W. Kuhlen},

booktitle = {ACM International Conference on Intelligent Virtual Agents (IVA ’23)},

title = {Whom Do You Follow? Pedestrian Flows Constraining the User’s Navigation during Scene Exploration},

year = {2023},

organization = {ACM},

pages = {3},

doi = {10.1145/3570945.3607350},

}

Poster: Where Do They Go? Overhearing Conversing Pedestrian Groups during Scene Exploration

On entering an unknown immersive virtual environment, a user’s first task is gaining knowledge about the respective scene, termed scene exploration. While many techniques for aided scene exploration exist, such as virtual guides or maps, unaided wayfinding through pedestrians-as-cues is still in its infancy. We contribute to this research by indirectly guiding users through pedestrian groups conversing about their target location. A user who overhears the conversation without being a direct addressee can consciously decide whether to follow the group to reach an unseen point of interest. We outline our approach and give insights into the results of a first feasibility study in which we compared our new approach to non-talkative groups and to groups conversing about random topics.

@InProceedings{Boensch2023a,

author = {Andrea Bönsch and Till Sittart and Jonathan Ehret and Torsten W. Kuhlen},

booktitle = {ACM International Conference on Intelligent Virtual Agents (IVA ’23)},

title = {Where Do They Go? Overhearing Conversing Pedestrian Groups during Scene Exploration},

year = {2023},

pages = {3},

publisher = {ACM},

doi = {10.1145/3570945.3607351},

}

Poster: Hoarseness among university professors and how it can influence students’ listening impression: an audio-visual immersive VR study

For university students, following a lecture can be challenging when room acoustic conditions are poor or when their professor suffers from a voice disorder. Owing to the high vocal demands of teaching, university professors develop voice disorders quite frequently. The key symptom is hoarseness. The aim of this study is to investigate the effect of hoarseness on university students’ subjective listening effort and listening impression using audio-visual immersive virtual reality (VR), including a real-time room simulation of a typical seminar room. Equipped with a head-mounted display, participants are immersed in the virtual seminar room with typical binaural background sounds, where they perform a listening task. This task involves comprehending and recalling information from text read aloud by a female virtual professor positioned at the front of the seminar room. Texts are presented in two experimental blocks, one read aloud in a normal (modal) voice, the other in a hoarse voice. After each block, participants fill out a questionnaire to evaluate their perceived listening effort and overall listening impression under the respective voice quality, as well as the human-likeness of and preferences towards the virtual professor. Results are presented and discussed regarding voice quality design for virtual tutors and potential implications for students’ motivation and performance in academic learning spaces.

@InProceedings{Schiller2023Audictive,

author = {Isabel S. Schiller and Lukas Aspöck and Carolin Breuer and Jonathan Ehret and Andrea Bönsch},

booktitle = {Proceedings of the 1st AUDICTIVE Conference},

title = {Hoarseness among university professors and how it can

influence students’ listening impression: an audio-visual immersive VR

study},

year = {2023},

pages = {134-137},

doi = { 10.18154/RWTH-2023-08885},

}

Does a Talker's Voice Quality Affect University Students' Listening Effort in a Virtual Seminar Room?

A university professor's voice quality can either facilitate or impede effective listening in students. In this study, we investigated the effect of hoarseness on university students’ listening effort in seminar rooms using audio-visual virtual reality (VR). During the experiment, participants were immersed in a virtual seminar room with typical background sounds and performed a dual-task paradigm involving listening to and answering questions about short stories, narrated by a female virtual professor, while responding to tactile vibration patterns. In a within-subject design, the professor's voice quality was varied between normal and hoarse. Listening effort was assessed based on performance and response time measures in the dual-task paradigm and participants’ subjective evaluation. It was hypothesized that listening to a hoarse voice leads to higher listening effort. While the analysis is still ongoing, our preliminary results show that listening to the hoarse voice significantly increased perceived listening effort. In contrast, the effect of voice quality was not significant in the dual-task paradigm. These findings indicate that, even if students' performance remains unchanged, listening to hoarse university professors may still require more effort.

@INBOOK{Schiller:977866,

author = {Schiller, Isabel Sarah and Bönsch, Andrea and Ehret,

Jonathan and Breuer, Carolin and Aspöck, Lukas},

title = {{D}oes a talker's voice quality affect university

students' listening effort in a virtual seminar room?},

address = {Turin},

publisher = {European Acoustics Association},

pages = {2813-2816},

year = {2024},

booktitle = {Proceedings of the 10th Convention of

the European Acoustics Association :

Forum Acusticum 2023. Politecnico di

Torino, Torino, Italy, September 11 -

15, 2023 / Editors: Arianna Astolfi,

Francesco Asdrubali, Louena Shtrepi},

month = {Sep},

date = {2023-09-11},

organization = {10. Convention of the European

Acoustics Association : Forum

Acusticum, Turin (Italy), 11 Sep 2023 -

15 Sep 2023},

doi = {10.61782/fa.2023.0320},

}

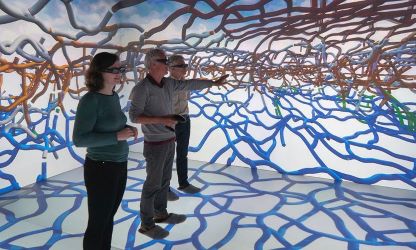

Quantitative Mapping of Keratin Networks in 3D

Mechanobiology requires precise quantitative information on processes taking place in specific 3D microenvironments. Connecting the abundance of microscopical, molecular, biochemical, and cell mechanical data with defined topologies has turned out to be extremely difficult. Establishing such structural and functional 3D maps needed for biophysical modeling is a particular challenge for the cytoskeleton, which consists of long and interwoven filamentous polymers coordinating subcellular processes and interactions of cells with their environment. To date, useful tools are available for the segmentation and modeling of actin filaments and microtubules, but comprehensive tools for the mapping of intermediate filament organization are still lacking. In this work, we describe a workflow to model and examine the complete 3D arrangement of the keratin intermediate filament cytoskeleton in canine, murine, and human epithelial cells, both in vitro and in vivo. Numerical models are derived from confocal Airyscan high-resolution 3D imaging of fluorescence-tagged keratin filaments. They are interrogated and annotated at different length scales using different modes of visualization, including immersive virtual reality. In this way, information is provided on network organization at the subcellular level, including mesh arrangement, density, and isotropic configuration, as well as details on filament morphology such as bundling, curvature, and orientation. We show that the comparison of these parameters helps to identify, in quantitative terms, similarities and differences of keratin network organization in epithelial cell types defining subcellular domains, notably basal, apical, lateral, and perinuclear systems. The described approach and the presented data are pivotal for generating mechanobiological models that can be experimentally tested.

@article {Windoffer2022,

article_type = {journal},

title = {{Quantitative Mapping of Keratin Networks in 3D}},

author = {Windoffer, Reinhard and Schwarz, Nicole and Yoon, Sungjun and Piskova, Teodora and Scholkemper, Michael and Stegmaier, Johannes and Bönsch, Andrea and Di Russo, Jacopo and Leube, Rudolf},

editor = {Coulombe, Pierre},

volume = 11,

year = 2022,

month = {feb},

pub_date = {2022-02-18},

pages = {e75894},

citation = {eLife 2022;11:e75894},

doi = {10.7554/eLife.75894},

url = {https://doi.org/10.7554/eLife.75894},

journal = {eLife},

issn = {2050-084X},

publisher = {eLife Sciences Publications, Ltd},

}

Late-Breaking Report: Natural Turn-Taking with Embodied Conversational Agents

Adding embodied conversational agents (ECAs) to immersive virtual environments (IVEs) is becoming relevant in various application scenarios, for example, conversational systems. For successful interactions, these ECAs have to behave naturally, i.e., in the way a user would expect a real human to behave. Teaming up with acousticians and psychologists, we strive to explore turn-taking in VR-based interactions between either two ECAs or an ECA and a human user.

Late-Breaking Report: An Embodied Conversational Agent Supporting Scene Exploration by Switching between Guiding and Accompanying

In this late-breaking report, we first motivate the need for an embodied conversational agent (ECA) who combines characteristics of a virtual tour guide and a knowledgeable companion in order to give users an interactive, adaptable, yet structured exploration of an unknown immersive architectural environment. Second, we briefly outline our proposed ECA’s behavioral design, followed by a teaser on the planned user study.

Do Prosody and Embodiment Influence the Perceived Naturalness of Conversational Agents' Speech?

presented at ACM Symposium on Applied Perception (SAP)

For conversational agents’ speech, all possible sentences have to be either prerecorded by voice actors or synthesized. While synthesizing speech is more flexible and economical in production, it also potentially reduces the perceived naturalness of the agents, among other things due to mistakes at various linguistic levels. In our paper, we are interested in the impact of adequate and inadequate prosody, here particularly in terms of accent placement, on the perceived naturalness and aliveness of the agents. We compare (i) inadequate prosody, as generated by off-the-shelf text-to-speech (TTS) engines with synthetic output, (ii) the same inadequate prosody imitated by trained human speakers, and (iii) adequate prosody produced by those speakers. The speech was presented either as audio-only or by embodied, anthropomorphic agents, to investigate the potential masking effect of a simultaneous visual representation of those virtual agents. To this end, we conducted an online study with 40 participants listening to four different dialogues, each presented in the three Speech levels and the two Embodiment levels. Results confirmed that adequate prosody in human speech is perceived as more natural (and the agents are perceived as more alive) than inadequate prosody in both human (ii) and synthetic speech (i). Thus, it is not sufficient to just use a human voice for an agent’s speech to be perceived as natural; what is decisive is whether the prosodic realisation is adequate. Furthermore, and surprisingly, we found no masking effect by speaker embodiment, since neither a human voice with inadequate prosody nor a synthetic voice was judged as more natural when a virtual agent was visible compared to the audio-only condition. On the contrary, the human voice was even judged as less “alive” when accompanied by a virtual agent.
In sum, our results emphasize the importance of adequate prosody for perceived naturalness, especially in terms of accents being placed on important words in the phrase, while showing that the embodiment of virtual agents plays a minor role in naturalness ratings of voices.


@article{Ehret2021a,

author = {Ehret, Jonathan and B\"{o}nsch, Andrea and Asp\"{o}ck, Lukas and R\"{o}hr, Christine T. and Baumann, Stefan and Grice, Martine and Fels, Janina and Kuhlen, Torsten W.},

title = {Do Prosody and Embodiment Influence the Perceived Naturalness of Conversational Agents’ Speech?},

journal = {ACM transactions on applied perception},

year = {2021},

issue_date = {October 2021},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {18},

number = {4},

articleno = {21},

issn = {1544-3558},

url = {https://doi.org/10.1145/3486580},

doi = {10.1145/3486580},

numpages = {15},

keywords = {speech, audio, accentuation, prosody, text-to-speech, Embodied conversational agents (ECAs), virtual acoustics, embodiment}

}

Being Guided or Having Exploratory Freedom: User Preferences of a Virtual Agent’s Behavior in a Museum

A virtual guide in an immersive virtual environment allows users a structured experience without missing critical information. However, although the medium is interactive, the user is only a passive listener, while the embodied conversational agent (ECA) fulfills the active roles of wayfinding and conveying knowledge. Thus, we investigated for the use case of a virtual museum whether users prefer a virtual guide or a free exploration accompanied by an ECA who imparts the same information as the guide. Results of a small within-subjects study with a head-mounted display are given and discussed, resulting in the idea of combining the benefits of both conditions for higher user acceptance. Furthermore, the study indicated the feasibility of the carefully designed scene and the ECA’s appearance.

We also submitted a GALA video entitled "An Introduction to the World of Internet Memes by Curator Kate: Guiding or Accompanying Visitors?" by D. Hashem, A. Bönsch, J. Ehret, and T.W. Kuhlen, showcasing our application.

IVA 2021 GALA Audience Award!


@inproceedings{Boensch2021b,

author = {B\"{o}nsch, Andrea and Hashem, David and Ehret, Jonathan and Kuhlen, Torsten W.},

title = {{Being Guided or Having Exploratory Freedom: User Preferences of a Virtual Agent's Behavior in a Museum}},

year = {2021},

isbn = {9781450386197},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3472306.3478339},

doi = {10.1145/3472306.3478339},

booktitle = {{Proceedings of the 21st ACM International Conference on Intelligent Virtual Agents}},

pages = {33–40},

numpages = {8},

keywords = {virtual agents, enjoyment, guiding, virtual reality, free exploration, museum, embodied conversational agents},

location = {Virtual Event, Japan},

series = {IVA '21}

}

Poster: Indirect User Guidance by Pedestrians in Virtual Environments

Scene exploration allows users to acquire scene knowledge on entering an unknown virtual environment. To support users in this endeavor, aided wayfinding strategies intentionally influence the user’s wayfinding decisions through, e.g., signs or virtual guides.

Our focus, however, is an unaided wayfinding strategy, in which we use virtual pedestrians as social cues to indirectly and subtly guide users through virtual environments during scene exploration. We briefly outline the required pedestrians’ behavior and the results of a first feasibility study indicating the potential of the general approach.


@inproceedings {Boensch2021a,

booktitle = {ICAT-EGVE 2021 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments - Posters and Demos},

editor = {Maiero, Jens and Weier, Martin and Zielasko, Daniel},

title = {{Indirect User Guidance by Pedestrians in Virtual Environments}},

author = {Bönsch, Andrea and Güths, Katharina and Ehret, Jonathan and Kuhlen, Torsten W.},

year = {2021},

publisher = {The Eurographics Association},

ISSN = {1727-530X},

ISBN = {978-3-03868-159-5},

DOI = {10.2312/egve.20211336}

}

Poster: Prosodic and Visual Naturalness of Dialogs Presented by Conversational Virtual Agents

Conversational virtual agents, with and without visual representation, are becoming more present in our daily lives, e.g., as intelligent virtual assistants on smart devices. To investigate the naturalness of both the speech and the nonverbal behavior of embodied conversational agents (ECAs), an interdisciplinary research group was initiated, consisting of phoneticians, computer scientists, and acoustic engineers. For a web-based pilot experiment, simple dialogs between a male and a female speaker were created with three prosodic conditions. For condition (1), the dialog was created synthetically using a text-to-speech engine. For the other two prosodic conditions, human speakers were recorded (2) with the erroneous accentuation of the text-to-speech synthesis of condition (1) and (3) with a natural accentuation. Face tracking data of the recorded speakers was additionally obtained and used as input for the facial animation of the ECAs. Based on the recorded data, auralizations in a virtual acoustic environment were generated and presented to the participants as binaural signals, either combined with the visual representation of the ECAs as short videos or without any visual feedback. A preliminary evaluation of the participants’ responses to questions related to naturalness, presence, and preference is presented in this work.

@inproceedings{Aspoeck2021,

author = {Asp\"{o}ck, Lukas and Ehret, Jonathan and Baumann, Stefan and B\"{o}nsch, Andrea and R\"{o}hr, Christine T. and Grice, Martine and Kuhlen, Torsten W. and Fels, Janina},

title = {Prosodic and Visual Naturalness of Dialogs Presented by Conversational Virtual Agents},

year = {2021},

note = {Hybride Konferenz},

month = {Aug},

date = {2021-08-15},

organization = {47. Jahrestagung für Akustik, Wien (Austria), 15 Aug 2021 - 18 Aug 2021},

url = {https://vr.rwth-aachen.de/publication/02207/}

}

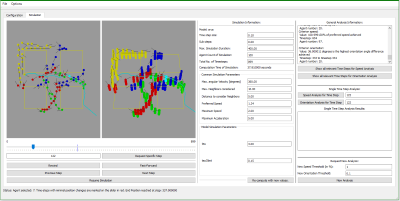

Talk: Numerical Analysis of Keratin Networks in Selected Cell Types

Keratin intermediate filaments make up the main intracellular cytoskeletal network of epithelia and provide, together with their associated desmosomal cell-cell adhesions, mechanical resilience. Remarkable differences in keratin network topology have been noted in different epithelial cell types ranging from a well-defined subapical network in enterocytes to pancytoplasmic networks in keratinocytes. In addition, functional states and biophysical, biochemical, and microbial stress have been shown to affect network organization. To gain insight into the importance of network topology for cellular function and resilience, quantification of 3D keratin network topology is needed.

We used Airyscan super-resolution microscopy to record image stacks with an x/y resolution of 120 nm and an axial resolution of 350 nm in canine kidney-derived MDCK cells, human epidermal keratinocytes, and murine retinal pigment epithelium (RPE) cells. The established segmentation algorithm TSOAX was implemented in combination with additional analysis tools to create a numerical representation of the keratin network topology in the different cell types. The resulting representation contains the XYZ position of all filament segment vertices together with data on filament thickness and information on the connecting nodes. This allows the statistical analysis of network parameters such as length, density, orientation, and mesh size. Furthermore, the network can be rendered in standard 3D software, which makes it accessible in 3D at hitherto unattained quality. Comparison of the three analyzed cell types reveals significant numerical differences in various parameters.
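How length, density, and orientation follow from vertex positions can be sketched as below. This is not the authors' TSOAX-based pipeline: the `Filament` class, the polyline layout, the micrometre unit, and the particular metrics chosen (end-to-end straightness as a simple curvature proxy, inclination against the XY plane as one orientation measure) are all illustrative assumptions.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # XYZ vertex position, assumed in micrometres


@dataclass
class Filament:
    """One filament as an ordered polyline of vertices (hypothetical layout)."""
    vertices: List[Point]

    def contour_length(self) -> float:
        # Sum of the Euclidean lengths of consecutive segments.
        return sum(math.dist(p, q) for p, q in zip(self.vertices, self.vertices[1:]))

    def straightness(self) -> float:
        # End-to-end distance divided by contour length: 1.0 = perfectly straight,
        # smaller values indicate more strongly curved filaments.
        length = self.contour_length()
        if length == 0.0:
            return 1.0
        return math.dist(self.vertices[0], self.vertices[-1]) / length

    def inclination_deg(self) -> float:
        # Angle between the end-to-end vector and the XY plane
        # (0 = lies in the plane, 90 = parallel to the optical z axis).
        (x0, y0, z0), (x1, y1, z1) = self.vertices[0], self.vertices[-1]
        horizontal = math.hypot(x1 - x0, y1 - y0)
        return math.degrees(math.atan2(abs(z1 - z0), horizontal))


def length_density(filaments: Sequence[Filament], volume: float) -> float:
    """Total filament length per unit volume, e.g. µm per µm³."""
    return sum(f.contour_length() for f in filaments) / volume


if __name__ == "__main__":
    flat = Filament([(0.0, 0.0, 0.0), (3.0, 4.0, 0.0)])
    bent = Filament([(0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 1.0, 1.0)])
    print(flat.contour_length(), flat.straightness())       # 5.0 1.0
    print(bent.contour_length(), bent.inclination_deg())
    print(length_density([flat, bent], volume=10.0))
```

Per-filament values like these can then be aggregated into distributions per cell type, which is the kind of comparison the talk describes; mesh size would additionally require the node connectivity, which this sketch omits.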

Listening to, and remembering conversations between two talkers: Cognitive research using embodied conversational agents in audiovisual virtual environments

In the AUDICTIVE project about listening to, and remembering the content of conversations between two talkers we aim to investigate the combined effects of potentially performance-relevant but scarcely addressed audiovisual cues on memory and comprehension for running speech. Our overarching methodological approach is to develop an audiovisual Virtual Reality testing environment that includes embodied Virtual Agents (VAs). This testing environment will be used in a series of experiments to research the basic aspects of audiovisual cognitive performance in a close(r)-to-real-life setting. We aim to provide insights into the contribution of acoustical and visual cues on the cognitive performance, user experience, and presence as well as quality and vibrancy of VR applications, especially those with a social interaction focus. We will study the effects of variations in the audiovisual ’realism’ of virtual environments on memory and comprehension of multi-talker conversations and investigate how fidelity characteristics in audiovisual virtual environments contribute to the realism and liveliness of social VR scenarios with embodied VAs. Additionally, we will study the suitability of text memory, comprehension measures, and subjective judgments to assess the quality of experience of a VR environment. First steps of the project with respect to the general idea of AUDICTIVE are presented.

@inproceedings{Fels2021,

author = {Fels, Janina and Ermert, Cosima A. and Ehret, Jonathan and Mohanathasan, Chinthusa and B\"{o}nsch, Andrea and Kuhlen, Torsten W. and Schlittmeier, Sabine J.},

title = {Listening to, and Remembering Conversations between Two Talkers: Cognitive Research using Embodied Conversational Agents in Audiovisual Virtual Environments},

address = {Berlin},

publisher = {Deutsche Gesellschaft für Akustik e.V. (DEGA)},

pages = {1328-1331},

year = {2021},

booktitle = {[Fortschritte der Akustik - DAGA 2021, DAGA 2021, 2021-08-15 - 2021-08-18, Wien, Austria]},

month = {Aug},

date = {2021-08-15},

organization = {47. Jahrestagung für Akustik, Wien (Austria), 15 Aug 2021 - 18 Aug 2021},

url = {https://vr.rwth-aachen.de/publication/02206/}

}

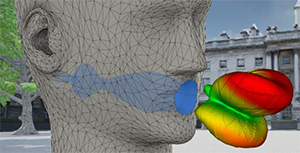

Talk: Speech Source Directivity for Embodied Conversational Agents

Embodied conversational agents (ECAs) are computer-controlled characters who communicate with a human using natural language. Represented as virtual humans, ECAs are often used in domains such as training, therapy, or guided tours while embedded in an immersive virtual environment. Plausible speech sound is therefore desirable to improve the overall plausibility of these virtual-reality-based simulations. In an audiovisual VR experiment, we investigated the impact of directional radiation of the produced speech on perceived naturalness. Furthermore, we examined how directivity filters influence participants' perceived social presence in interactions with an ECA. To this end, we varied the source directivity between 1) omnidirectional, 2) the average directivity of a human speaker, and 3) dynamic adaptation to the currently produced phonemes. Our results indicate that directional speech is noticed and rated as more natural. However, adding dynamic, phoneme-dependent directivity did not significantly change perceived naturalness. Furthermore, no significant differences in social presence were measurable between any of the three conditions.

Bibtex:

@misc{Ehret2021b,

author = {Ehret, Jonathan and Aspöck, Lukas and B\"{o}nsch, Andrea and Fels, Janina and Kuhlen, Torsten W.},

title = {Speech Source Directivity for Embodied Conversational Agents},

publisher = {IHTA, Institute for Hearing Technology and Acoustics},

year = {2021},

note = {Hybrid conference},

month = {Aug},

date = {2021-08-15},

organization = {47. Jahrestagung für Akustik, Wien (Austria), 15 Aug 2021 - 18 Aug 2021},

subtyp = {Video},

url = {https://vr.rwth-aachen.de/publication/02205/}

}

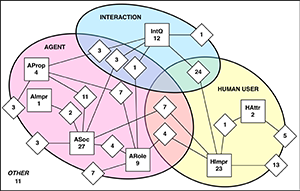

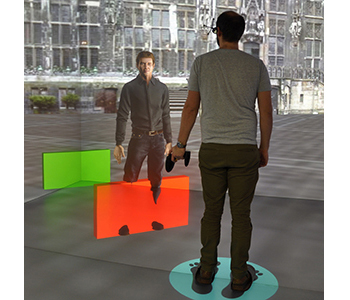

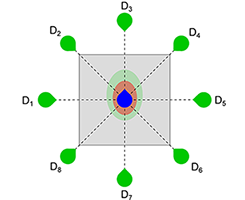

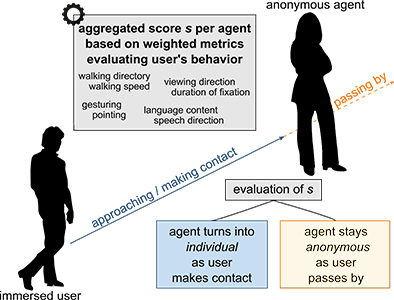

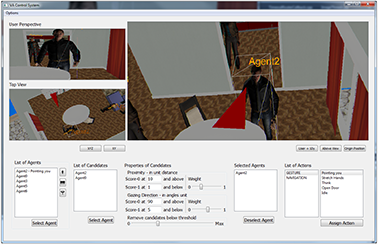

Inferring a User’s Intent on Joining or Passing by Social Groups

Modeling the interactions between users and social groups of virtual agents (VAs) is vital in many virtual-reality-based applications. However, little research on group encounters has been conducted so far. We intend to close this gap by focusing on the distinction between joining and passing by a group. To enhance the interactive capacity of VAs in these situations, the VAs need to know the user’s objective in order to show reasonable reactions. To this end, we propose a classification scheme that infers the user’s intent based on social cues such as proxemics, gaze, and orientation, and then triggers believable, non-verbal actions of the VAs. We tested our approach in a pilot study with overall promising results and discuss possible improvements for further studies.

@inproceedings{10.1145/3383652.3423862,

author = {B\"{o}nsch, Andrea and Bluhm, Alexander R. and Ehret, Jonathan and Kuhlen, Torsten W.},

title = {Inferring a User's Intent on Joining or Passing by Social Groups},

year = {2020},

isbn = {9781450375863},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3383652.3423862},

doi = {10.1145/3383652.3423862},

abstract = {Modeling the interactions between users and social groups of virtual agents (VAs) is vital in many virtual-reality-based applications. However, only little research on group encounters has been conducted yet. We intend to close this gap by focusing on the distinction between joining and passing-by a group. To enhance the interactive capacity of VAs in these situations, knowing the user's objective is required to show reasonable reactions. To this end, we propose a classification scheme which infers the user's intent based on social cues such as proxemics, gazing and orientation, followed by triggering believable, non-verbal actions on the VAs. We tested our approach in a pilot study with overall promising results and discuss possible improvements for further studies.},

booktitle = {Proceedings of the 20th ACM International Conference on Intelligent Virtual Agents},

articleno = {10},

numpages = {8},

keywords = {virtual agents, joining a group, social groups, virtual reality},

location = {Virtual Event, Scotland, UK},

series = {IVA '20}

}

Evaluating the Influence of Phoneme-Dependent Dynamic Speaker Directivity of Embodied Conversational Agents’ Speech

Generating natural embodied conversational agents within virtual spaces crucially depends on speech sounds and their directionality. In this work, we simulated directional filters not only to add directionality but also to adapt the directivity to each phoneme. In this way, we mimic reality, where changing mouth shapes influence the directional propagation of sound. We conducted a study (n = 32) evaluating naturalism ratings, preference, and distinguishability of omnidirectional speech auralization compared to static and dynamic, phoneme-dependent directivities. The results indicated that participants could not distinguish dynamic from static directivity. Furthermore, participants’ preference ratings aligned with their naturalism ratings. There was no unanimity, however, with regard to which auralization is the most natural.

@inproceedings{10.1145/3383652.3423863,

author = {Ehret, Jonathan and Stienen, Jonas and Brozdowski, Chris and B\"{o}nsch, Andrea and Mittelberg, Irene and Vorl\"{a}nder, Michael and Kuhlen, Torsten W.},

title = {Evaluating the Influence of Phoneme-Dependent Dynamic Speaker Directivity of Embodied Conversational Agents' Speech},

year = {2020},

isbn = {9781450375863},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3383652.3423863},

doi = {10.1145/3383652.3423863},

abstract = {Generating natural embodied conversational agents within virtual spaces crucially depends on speech sounds and their directionality. In this work, we simulated directional filters to not only add directionality, but also directionally adapt each phoneme. We therefore mimic reality where changing mouth shapes have an influence on the directional propagation of sound. We conducted a study (n = 32) evaluating naturalism ratings, preference and distinguishability of omnidirectional speech auralization compared to static and dynamic, phoneme-dependent directivities. The results indicated that participants cannot distinguish dynamic from static directivity. Furthermore, participants' preference ratings aligned with their naturalism ratings. There was no unanimity, however, with regards to which auralization is the most natural.},

booktitle = {Proceedings of the 20th ACM International Conference on Intelligent Virtual Agents},

articleno = {17},

numpages = {8},

keywords = {phoneme-dependent directivity, directional 3D sound, speech, embodied conversational agents, virtual acoustics},

location = {Virtual Event, Scotland, UK},

series = {IVA '20}

}

The Impact of a Virtual Agent’s Non-Verbal Emotional Expression on a User’s Personal Space Preferences

Virtual-reality-based interactions with virtual agents (VAs) are likely subject to influences similar to those in human-human interactions. In both real and virtual social interactions, interactants try to maintain their personal space (PS), a ubiquitous, situational, flexible safety zone. Building upon previous findings of larger PS preferences toward humans and VAs with angry facial expressions, we extend the investigation to whole-body emotional expressions. In two immersive settings (HMD and CAVE), 66 male participants were approached by a male VA expressing either happiness, anger, or a neutral emotion. Participants preferred a larger PS to the angry VA both when they could stop him at their convenience (Sample task), replicating previous findings, and when they could actively avoid him (PassBy task). In the latter task, we also observed larger distances in the CAVE than in the HMD.

@inproceedings{10.1145/3383652.3423888,

author = {B\"{o}nsch, Andrea and Radke, Sina and Ehret, Jonathan and Habel, Ute and Kuhlen, Torsten W.},

title = {The Impact of a Virtual Agent's Non-Verbal Emotional Expression on a User's Personal Space Preferences},

year = {2020},

isbn = {9781450375863},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3383652.3423888},

doi = {10.1145/3383652.3423888},

abstract = {Virtual-reality-based interactions with virtual agents (VAs) are likely subject to similar influences as human-human interactions. In either real or virtual social interactions, interactants try to maintain their personal space (PS), an ubiquitous, situative, flexible safety zone. Building upon larger PS preferences to humans and VAs with angry facial expressions, we extend the investigations to whole-body emotional expressions. In two immersive settings (HMD and CAVE), 66 males were approached by an either happy, angry, or neutral male VA. Subjects preferred a larger PS to the angry VA when being able to stop him at their convenience (Sample task), replicating previous findings, and when being able to actively avoid him (Pass By task). In the latter task, we also observed larger distances in the CAVE than in the HMD.},

booktitle = {Proceedings of the 20th ACM International Conference on Intelligent Virtual Agents},

articleno = {12},

numpages = {8},

keywords = {personal space, virtual reality, emotions, virtual agents},

location = {Virtual Event, Scotland, UK},

series = {IVA '20}

}

The 19 Unifying Questionnaire Constructs of Artificial Social Agents: An IVA Community Analysis